Our Roadmap to Useful Robotics

Jun 10, 2016

To deliver the best hands in the world, we’ve collaborated with the best researchers. But what does it take to reach beyond a research-oriented market and solve real-world problems with robots? We’re convinced that tailoring a custom solution to each problem is not the way forward. We want the people facing those problems to be able to use our solutions themselves, and we have a roadmap to get there.

A bit of history

Developing the most dexterous hand in the world

1987 — first wooden prototype

It’s been a long journey for the dexterous hand, from humble beginnings as a wooden prototype back in 1987 to our current award-winning robot.

The first motor-actuated version of the hand was developed in 2000. Our state-of-the-art dexterous hand now has 24 degrees of freedom (DOF), with 20 independent movements. It also streams data from hundreds of sensors to the control PC at 1 kHz, thanks to a switch from CAN to EtherCAT in 2011, developed during the HANDLE project.

The Shadow Dexterous Hand has also evolved a lot on the tactile side: simple pressure sensors, BioTacs, OptoForce, ATI Nanos… Integrating with a wide range of tactile sensors makes it possible to cover all our customers’ needs, from the most basic to the most demanding.

Scripted in-hand pen manipulation

So what can you do with the Hand? Perhaps the most logical answer is “What can’t you do with your hands?” That is true in theory, but actually making the robot do it is not so simple.

A research oriented product

To get our flagship product where it is today, we collaborated with researchers throughout the world. A number of European and UK-funded research projects shaped our Hand and its ecosystem.

Shadow’s culture has always been very open. We believe that to build the best robots, we need to share as much as possible with a wide community. On top of that, we’ve always aimed for our customers and partners to be able to work on any part of the system that interests them, from firmware to high-level control. While this drove the technology forward, it also means the interface and documentation are complex.

Now reaching towards a broader market

All those years of pushing our hand forward culminated in winning “Best of the Best” at CES Asia 2016, through the Moley Robotics project.

This highlighted some shortcomings when using our products in a “real-world” case:

- Because of its dexterity, the hand is a complex tool to use if you want to exploit its full potential.

- Complex tasks can be implemented, but doing so requires a lot of engineering time. The engineers don’t necessarily know much about the task itself; what if we could move that time over to the people who would normally do the task?

Our roadmap to making a great product useful

A powerful yet simple GUI

To take the complexity out of setting up a new robot behaviour, we need to focus on a simple GUI that can represent potentially complex behaviours in an intuitive way. Block programming seems like a good match for this: by sliding boxes around and combining them, end users can solve their problem without writing code or understanding robots.

The crucial part of this process is identifying the best set of boxes. The keyword here is balance:

- If the boxes are too small (e.g. “move this joint to this angle”), they are very easy to reuse in different situations, but the final “recipe” becomes too complex to build and understand.

- If the boxes include too much (e.g. “make me a sandwich”), they will only be useful in a few cases.
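To make the granularity trade-off concrete, here is a minimal sketch of how boxes and recipes might be modelled. It is not Shadow’s implementation: the `Box` and `Recipe` classes, the `move_joint`, `grasp` and `place` box factories, and the dictionary standing in for the robot state are all hypothetical, and the task-level boxes only update that state rather than driving hardware.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical, simplified robot state shared by all boxes in a recipe.
State = Dict[str, object]


@dataclass
class Box:
    """One block in the GUI: a named step that updates the robot state."""
    name: str
    run: Callable[[State], None]


@dataclass
class Recipe:
    """A user-built program: an ordered sequence of boxes."""
    boxes: List[Box] = field(default_factory=list)

    def add(self, box: Box) -> "Recipe":
        self.boxes.append(box)
        return self  # allows chaining: Recipe().add(a).add(b)

    def execute(self, state: State) -> List[str]:
        """Run each box in order and return the names of the executed steps."""
        executed = []
        for box in self.boxes:
            box.run(state)
            executed.append(box.name)
        return executed


def move_joint(joint: str, angle_deg: float) -> Box:
    """Fine-grained box: reusable anywhere, but recipes built from it get long."""
    def run(state: State) -> None:
        state.setdefault("joints", {})[joint] = angle_deg
    return Box(f"move {joint} to {angle_deg} deg", run)


def grasp(obj: str) -> Box:
    """Task-level box: hides joint detail (a real one would call a grasp planner)."""
    def run(state: State) -> None:
        state["holding"] = obj
    return Box(f"grasp {obj}", run)


def place(location: str) -> Box:
    """Task-level box: releases whatever is currently held at the location."""
    def run(state: State) -> None:
        state["placed"] = (state.pop("holding", None), location)
    return Box(f"place at {location}", run)
```

With task-level boxes, `Recipe().add(grasp("cup")).add(place("tray"))` describes a pick-and-place in two steps, whereas the same behaviour built only from `move_joint` boxes would need one box per joint target for every phase of the motion.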

Behind Boxes

This simple GUI is just the tip of the iceberg. To keep the boxes simple, generic, and useful, we’ll need robust algorithms in the background. Let’s focus on the two main challenges we’re facing:

- vision: we need the robot to be able to identify what surrounds it. Good object segmentation and recognition are crucial. Other abilities, such as safe planning around humans, are also necessary.

- grasping: an autonomous grasping box is necessary. Users shouldn’t have to worry about how the robot will achieve the grasp; they should simply be able to tell it to grasp this object.

A self improving robot

Hard-coding the different libraries behind the boxes is not feasible. Recognising any object and grasping it perfectly every time, in any situation, is just a dream. Instead, we need to help the robot learn from its experience. This is a perfect opportunity to use the cloud to share knowledge between deployed robots.

Improving vision

Proposed vision pipeline

When faced with an unknown object, the robot can learn it and add it to a common knowledge base, so that the other robots connected to the same cloud can recognise it as well.
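The sharing idea can be sketched in a few lines. This is only an illustration under several assumptions: an in-memory dictionary stands in for the cloud knowledge base, each object is represented by a feature vector produced by some upstream segmentation and recognition step (not shown), recognition is a plain nearest-neighbour match with a hypothetical distance threshold, and the label for a new object is assumed to be supplied when it is learned (for example by a user). `SharedObjectStore` and `Robot` are hypothetical names.

```python
import math
from typing import Dict, List, Optional, Sequence


class SharedObjectStore:
    """Stand-in for a cloud knowledge base shared by several robots."""

    def __init__(self, match_threshold: float = 0.5) -> None:
        self.match_threshold = match_threshold  # assumed max distance for a match
        self.objects: Dict[str, List[float]] = {}

    def recognise(self, features: Sequence[float]) -> Optional[str]:
        """Return the closest known label, or None if nothing is close enough."""
        best_label, best_dist = None, math.inf
        for label, known in self.objects.items():
            dist = math.dist(features, known)  # Euclidean distance (Python 3.8+)
            if dist < best_dist:
                best_label, best_dist = label, dist
        return best_label if best_dist <= self.match_threshold else None

    def learn(self, label: str, features: Sequence[float]) -> None:
        """Add a new object so every robot using this store can recognise it."""
        self.objects[label] = list(features)


class Robot:
    """A deployed robot that consults (and contributes to) the shared store."""

    def __init__(self, store: SharedObjectStore) -> None:
        self.store = store

    def observe(self, features: Sequence[float], label_if_new: str) -> str:
        label = self.store.recognise(features)
        if label is None:
            # Unknown object: learn it locally and share it through the store.
            self.store.learn(label_if_new, features)
            label = label_if_new
        return label
```

Because both robots hold a reference to the same store, an object learned by one of them is immediately recognisable by the other; a real deployment would replace the dictionary with a networked service and the raw vectors with a proper recognition model.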

Improving grasping

When a new object is seen, a variety of grasp planners can be run on it to generate candidate grasps. This can be computationally expensive. To improve this:

- Run the quickest algorithms on the robot to get an initial list of candidate grasps for a new object.

- Run more computationally expensive grasp planning algorithms in the cloud, in the background, to generate new types of grasps for known objects.

- Feed all known grasps into a simulator to test and improve them continuously.

- When a grasp is used on a real robot, evaluate the outcome and feed it back into the grasp’s score to refine the grasps.
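The scoring loop described in these steps could look roughly like the sketch below. The planners and simulator themselves are not shown; candidate grasps are just named entries. The scoring rule is an assumption chosen for illustration: a Laplace-smoothed success rate, where each real-robot attempt is weighted more heavily than a simulated one via a hypothetical `REAL_TRIAL_WEIGHT` constant. `Grasp` and `GraspLibrary` are likewise hypothetical names.

```python
from dataclasses import dataclass
from typing import Dict, List

# Assumption: one real-robot attempt counts as much as 5 simulated attempts.
REAL_TRIAL_WEIGHT = 5.0


@dataclass
class Grasp:
    name: str
    source: str  # e.g. "onboard" (fast planner) or "cloud" (expensive planner)
    successes: float = 0.0
    trials: float = 0.0

    @property
    def score(self) -> float:
        # Laplace-smoothed success rate: an untested grasp starts at 0.5.
        return (self.successes + 1.0) / (self.trials + 2.0)


class GraspLibrary:
    """Per-object collection of candidate grasps with continuously updated scores."""

    def __init__(self) -> None:
        self.grasps: Dict[str, Dict[str, Grasp]] = {}

    def add_candidates(self, obj: str, names: List[str], source: str) -> None:
        """Register grasps from any planner; existing entries keep their history."""
        bucket = self.grasps.setdefault(obj, {})
        for name in names:
            bucket.setdefault(name, Grasp(name, source))

    def record(self, obj: str, name: str, success: bool, real: bool) -> None:
        """Feed back one simulated or real attempt into the grasp's score."""
        weight = REAL_TRIAL_WEIGHT if real else 1.0
        grasp = self.grasps[obj][name]
        grasp.trials += weight
        if success:
            grasp.successes += weight

    def best(self, obj: str) -> Grasp:
        return max(self.grasps[obj].values(), key=lambda g: g.score)


# Usage: a fast onboard grasp, later joined by one planned in the cloud.
lib = GraspLibrary()
lib.add_candidates("mug", ["top_pinch"], source="onboard")
lib.add_candidates("mug", ["handle_wrap"], source="cloud")
for _ in range(4):
    lib.record("mug", "top_pinch", success=True, real=False)   # simulator
for ok in (True, True, True, False):
    lib.record("mug", "handle_wrap", success=ok, real=False)   # simulator
lib.record("mug", "top_pinch", success=False, real=True)       # real robot
```

After the simulated trials alone, `top_pinch` ranks first (score 5/6 against 4/6); the single weighted real-world failure drops it to 5/11, so `best("mug")` switches to `handle_wrap`. Weighting real attempts more heavily is one way to let physical experience override simulation results when the two disagree.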

This idea is being researched by different labs throughout the world. Recently, the University of Washington did some exciting work on it.

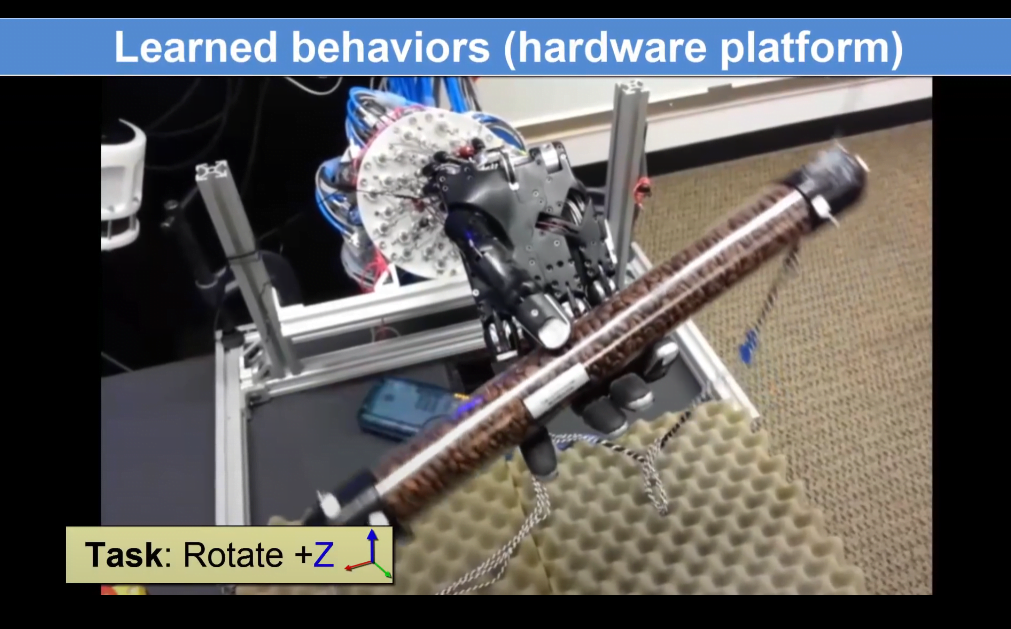

Optimal Control with Learned Local Models: Application to Dexterous Manipulation. Vikash Kumar, Emanuel Todorov, and Sergey Levine. ICRA 2016.

Final words

There is obviously a lot of work to be done, and a lot of research to convert into product-ready algorithms. But our aim is to make it possible to create new robot abilities as easily as decomposing them into logical steps, and thereby make robotics ‘useful’.